In an era where unmanned aerial systems (UAS) are transforming defense and commercial operations, the ability to navigate effectively in GPS-denied environments has become a critical requirement. At OKSI, we’ve developed OMNInav, a cutting-edge solution that delivers precise and reliable navigation without relying on GPS. This article highlights the innovative features of OMNInav and its role in addressing the challenges of modern UAS missions.

Overview of OMNInav

OMNInav is a core component of the OMNISCIENCE drone autonomy framework, delivering precise real-time positional awareness to enable autonomous operations in GPS-denied environments. By integrating seamlessly with popular flight stacks like PX4 and ArduPilot, OMNInav replaces GPS input, allowing the autopilot to handle navigation with accurate positional data. It supports a wide range of unmanned airframes, including rotary-wing, fixed-wing, and VTOL aircraft.
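To make "replaces GPS input" more concrete, here is a minimal sketch of the kind of conversion a GPS substitute typically performs before handing a position to an autopilot. It is not OKSI's interface: the function names, the known take-off origin, and the flat-earth approximation are all assumptions made for illustration. Autopilot protocols such as MAVLink's GPS_INPUT message carry latitude and longitude as integers scaled by 1e7, which is what `to_deg_e7` imitates.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 equatorial radius


def local_to_latlon(origin_lat_deg, origin_lon_deg, east_m, north_m):
    """Convert a small east/north offset (metres) from a known origin to lat/lon.

    Hypothetical helper using a flat-earth approximation, which is only
    adequate for offsets of a few kilometres.
    """
    dlat_rad = north_m / EARTH_RADIUS_M
    dlon_rad = east_m / (EARTH_RADIUS_M * math.cos(math.radians(origin_lat_deg)))
    return (origin_lat_deg + math.degrees(dlat_rad),
            origin_lon_deg + math.degrees(dlon_rad))


def to_deg_e7(angle_deg):
    """Scale degrees to the integer 'degrees * 1e7' convention."""
    return int(round(angle_deg * 1e7))


# Assumed scenario: the vision system reports 100 m east and 200 m north
# of a take-off point at 50.0 N, 30.0 E.
lat, lon = local_to_latlon(50.0, 30.0, east_m=100.0, north_m=200.0)
print(to_deg_e7(lat), to_deg_e7(lon))
```

In a real integration the scaled values would be packed into whatever message the flight stack expects, together with accuracy estimates and timestamps that this sketch leaves out.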

Key Features:

Modular Design: Seamless integration with existing systems

Compact Hardware: Low SWaP, 70x50x50 mm, weighing 300 grams, and consuming only 5 watts

Advanced AI Models: AI-based satellite registration for cross-modality navigation, supporting visible and infrared imagers

Flexible Deployment: Available as a software-only solution or combined hardware and software package

Understanding GPS-Denied Navigation Methods: Weaknesses of Single-Modality Solutions

OMNInav addresses limitations in traditional GPS-denied navigation methods by integrating multiple advanced techniques. Below is a detailed overview of commonly used visual navigation methods, their drawbacks, and how OMNInav overcomes these challenges:

1. Optical Flow

Definition: Tracks pixel motion in images to estimate relative movement

Advantages: Cost-effective and simple to implement

Figure 1: Illustration of optical flow in UAS navigation. The image consists of three parts: (1) a real-world scene from the UAS camera with overlaid optical flow vectors illustrating motion detection, (2) a plot representing optical flow data in the context of navigation, and (3) a diagram showing how the UAS’s field of view (FOV) changes with tilt angles, depicting the relationship between velocity (VT) and optical flow velocity (VOF)

Drawbacks: Optical flow fails at high altitudes where the ground appears static, and in environments with poor lighting or motion blur

Real-World Example: In Ukraine, drones that navigate smoke-covered battlefields cannot rely on optical flow alone due to obscured visuals and erratic movement caused by explosions or turbulence

OMNInav’s Solution: Incorporates infrared imaging and AI models to handle obscured or low-contrast environments, enabling reliable navigation even with degraded visuals

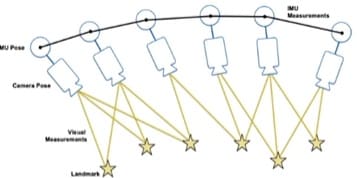
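The altitude limitation can be illustrated with a back-of-the-envelope model. The sketch below assumes a downward-facing pinhole camera over flat ground, where image motion per frame is roughly focal length (in pixels) times ground speed divided by altitude and frame rate. The function names and the camera parameters (800 px focal length, 30 Hz) are illustrative assumptions, not OMNInav specifications.

```python
def flow_pixels_per_frame(speed_mps, altitude_m, focal_px, frame_rate_hz):
    """Approximate image motion (pixels per frame) for a nadir camera over flat ground."""
    return focal_px * speed_mps / (altitude_m * frame_rate_hz)


def speed_from_flow(flow_px_per_frame, altitude_m, focal_px, frame_rate_hz):
    """Invert the model: ground speed implied by a measured flow and a known altitude."""
    return flow_px_per_frame * altitude_m * frame_rate_hz / focal_px


# Same 15 m/s ground speed seen from increasing altitudes.
for altitude_m in (10, 100, 1000, 5000):
    flow = flow_pixels_per_frame(15.0, altitude_m, focal_px=800.0, frame_rate_hz=30.0)
    # Velocity error caused by a fixed 0.1 px flow measurement error.
    error_mps = speed_from_flow(0.1, altitude_m, focal_px=800.0, frame_rate_hz=30.0)
    print(f"{altitude_m:>5} m: {flow:6.2f} px/frame, 0.1 px error = {error_mps:6.2f} m/s")
```

Under these assumptions the image motion shrinks in proportion to altitude, while the velocity error caused by a fixed sub-pixel measurement error grows in proportion to it. That is one way to read the statement that the ground "appears static" from high up.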

2. Visual Inertial Odometry (VIO)

Definition: Combines camera and inertial sensor data to estimate motion and position

Advantages: Reduced drift compared to optical flow alone

Drawbacks: VIO struggles with scale inaccuracies during initialization and becomes unreliable during rapid accelerations or turns

Figure 2: Depiction of Visual-Inertial Odometry (VIO). The image illustrates how a UAS combines data from its camera (camera pose and visual measurements) with inertial measurements from the IMU (inertial measurement unit). Visual landmarks are tracked across successive frames, enabling the system to estimate the UAS’s pose and trajectory by fusing visual and inertial data.

Real-World Example: Urban drones that perform reconnaissance in Kyiv face sudden accelerations and turns while dodging obstacles, causing VIO to lose accuracy quickly

OMNInav’s Solution: Combines VIO with SLAM (below) to continuously recalibrate and reset drift using AI-based feature matching

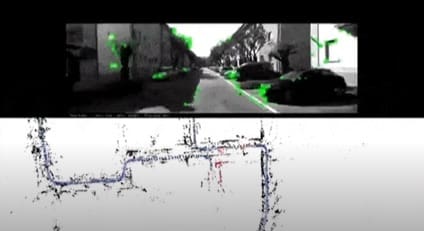
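To see why drift matters, consider only the inertial half of VIO. The sketch below is not a VIO implementation: it is a hypothetical one-axis dead-reckoning loop that assumes a constant, uncorrected accelerometer bias and no visual updates. It shows how a small bias, integrated twice, turns into position error that grows roughly with the square of elapsed time.

```python
def dead_reckon_drift(accel_bias_mps2, duration_s, dt=0.01):
    """Position error (metres) from double-integrating a constant accelerometer bias."""
    velocity = 0.0
    position = 0.0
    steps = int(round(duration_s / dt))
    for _ in range(steps):
        velocity += accel_bias_mps2 * dt  # first integration: bias becomes velocity error
        position += velocity * dt         # second integration: velocity error becomes drift
    return position


# Assumed bias of 0.05 m/s^2, left uncorrected for increasing durations.
for duration_s in (10, 60, 300):
    drift_m = dead_reckon_drift(0.05, duration_s)
    print(f"{duration_s:>4} s without correction: about {drift_m:8.1f} m of drift")
```

Camera measurements normally keep this growth in check. When visual tracking degrades, for example during the rapid accelerations and turns described above, the estimate leans more heavily on inertial data, and an absolute position reference is needed to reset the accumulated error.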

3. Simultaneous Localization and Mapping (SLAM)

Definition: Builds maps of the environment while simultaneously tracking the vehicle’s position within the map

Advantages: Provides accurate navigation in complex, long-term missions

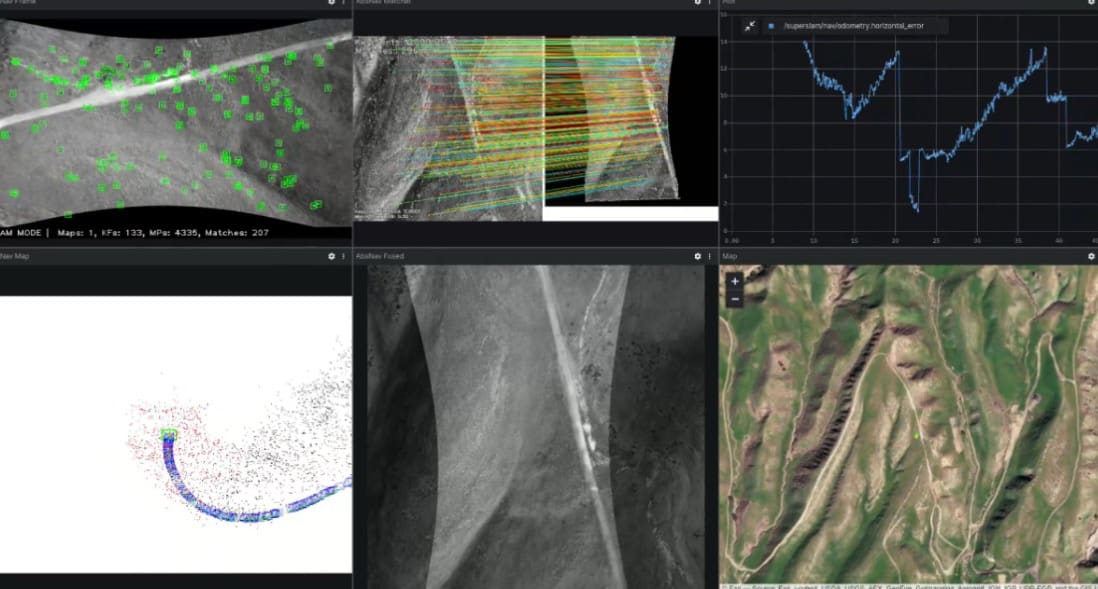

Figure 3: Simultaneous Localization and Mapping (SLAM) in action. The top panel shows a UAS’s camera view with detected visual features highlighted in green, while the bottom panel illustrates a real-time map of the environment generated by the SLAM algorithm. The map includes key structural features and demonstrates loop closure, ensuring accurate trajectory estimation and long-term consistency.

Drawbacks: Computationally expensive and fails in environments with rapidly changing landmarks (e.g., destroyed urban environments)

Figure 4: Example of map-based feature matching for position correction and drift reset. The image illustrates a UAS using feature matching to align its live sensor data (left) with a pre-stored map (right). Blue lines represent matched features between the two datasets, enabling precise localization and correction of positional drift over time.

Real-World Example: Drones that survey areas of urban destruction in Ukraine cannot rely on traditional SLAM due to rapidly changing landmarks and terrain

OMNInav’s Solution: Augments SLAM with AI-driven feature matching (below) to adapt to outdated or dynamically changing maps

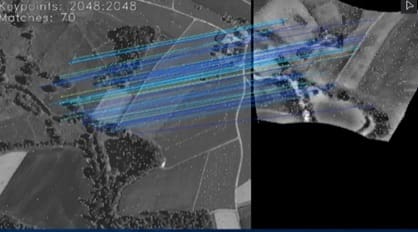

4. Feature-Based Localization

Definition: Uses pre-stored maps and landmarks or patterns to determine absolute position and correct drift

Advantages: Provides drift-free global positioning

Drawbacks: Requires pre-existing, accurate maps and fails in environments with limited recognizable landmarks; it also provides lower-frequency updates

Real-World Example: In rural farmland, where terrain changes seasonally (e.g., from plowed fields to snow cover), feature-based systems cannot adapt dynamically

OMNInav’s Solution: Leverages AI models trained on multi-year satellite imagery to handle seasonal and environmental changes, ensuring continuous adaptability
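The core idea of feature-based localization can be sketched in a few lines. The toy example below is an assumption-laden illustration, not OMNInav's algorithm: descriptors are hand-written three-element tuples rather than learned embeddings, matching uses a simple nearest-neighbor ratio test, and the pose is reduced to a 2D translation estimated with a median. Production systems typically estimate a full geometric transform with outlier rejection.

```python
import math
from statistics import median


def match_features(live, reference, ratio=0.8):
    """Match live features to map features by nearest descriptor.

    Each feature is (x, y, descriptor). A match is kept only if the best
    candidate is clearly closer than the second best (ratio test).
    """
    matches = []
    for lx, ly, ldesc in live:
        scored = sorted((math.dist(ldesc, rdesc), rx, ry) for rx, ry, rdesc in reference)
        best, second = scored[0], scored[1]
        if best[0] < ratio * second[0]:
            matches.append(((lx, ly), (best[1], best[2])))
    return matches


def estimate_offset(matches):
    """Median translation that maps live coordinates onto map coordinates."""
    dx = median(ref[0] - cur[0] for cur, ref in matches)
    dy = median(ref[1] - cur[1] for cur, ref in matches)
    return dx, dy


# Hypothetical stored map features (map coordinates in metres).
reference = [
    (150.0, 60.0, (0.9, 0.1, 0.3)),
    (200.0, 10.0, (0.2, 0.8, 0.5)),
    (170.0, -20.0, (0.4, 0.4, 0.9)),
    (260.0, 35.0, (0.7, 0.6, 0.1)),
]
# Hypothetical live observations (local coordinates); the last one is ambiguous.
live = [
    (30.0, 105.0, (0.88, 0.12, 0.31)),
    (80.0, 55.0, (0.21, 0.79, 0.52)),
    (50.0, 25.0, (0.41, 0.38, 0.91)),
    (10.0, 10.0, (0.5, 0.5, 0.5)),
]

matches = match_features(live, reference)
offset = estimate_offset(matches)
print(len(matches), offset)
```

The ambiguous observation is rejected by the ratio test, and the remaining matches agree on a single offset between the live frame and the map. When stored imagery is outdated or seasonal appearance changes, hand-crafted descriptors tend to stop matching, which is the gap the article's AI-based registration is meant to close.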

5. Military-Grade Navigation Systems

Definition: Advanced systems used in military applications, often leveraging custom hardware and complex algorithms

Advantages: Highly accurate and reliable in GPS-denied environments

Drawbacks: These systems are prohibitively expensive, bulky, and often proprietary, making them unsuitable for broader commercial applications or cost-sensitive defense missions

Real-World Example: High-end inertial navigation systems (INS) used in military drones provide reliable navigation in GPS-jammed environments but require extensive calibration and are not viable for smaller, lower-budget UAS operations

OMNInav’s Solution: Provides comparable reliability through advanced AI-driven navigation at a fraction of the cost, with flexible hardware and software options suited for both military and commercial platforms

OMNInav’s Innovative and Multi-Modality Approach

OMNInav bridges the technology gaps of traditional navigation methods by combining multiple advanced techniques into a unified, multi-modal system. By integrating SLAM for precise navigation, AI-driven feature matching for global localization, and robust sensor fusion, OMNInav eliminates the weaknesses of single-modality approaches. This innovative design ensures drift-free, accurate navigation across diverse flight profiles, making it ideal for both commercial and defense applications.

Key Features:

• SLAM for Precise Navigation: Creates detailed maps and tracks positions in real-time

• AI-Driven Feature Matching: Provides global localization by matching observed features to stored datasets

• Robust Sensor Fusion: Adapts to dynamic environments by integrating inputs from multiple imaging modalities, including visible, near-infrared, and thermal sensors
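How high-rate relative tracking and low-rate absolute fixes complement each other can be sketched with a one-dimensional Kalman filter. Everything here is an illustrative assumption rather than a description of OMNInav's internals: the function name, the noise variances, the 2% odometry scale error, and the fix interval of 20 steps are invented for the example.

```python
def fuse_odometry_with_fixes(odometry_steps, fixes, odom_var=0.04, fix_var=1.0):
    """Fuse relative motion steps with sparse absolute fixes (1D Kalman filter).

    odometry_steps: per-step displacement reported by relative tracking.
    fixes: dict mapping step index to an absolute position measurement.
    Returns the final position estimate and its variance.
    """
    position, variance = 0.0, 0.0
    for k, step in enumerate(odometry_steps):
        # Predict: apply the relative motion; uncertainty grows every step.
        position += step
        variance += odom_var
        if k in fixes:
            # Update: blend in the absolute fix, weighted by relative confidence.
            gain = variance / (variance + fix_var)
            position += gain * (fixes[k] - position)
            variance *= 1.0 - gain
    return position, variance


# Assumed scenario: true motion is 1.0 m per step for 100 steps, but the
# odometry over-reports by 2%. An absolute fix arrives every 20 steps.
steps = [1.02] * 100
fixes = {k: float(k + 1) for k in range(19, 100, 20)}
true_final = 100.0

odometry_only = sum(steps)
fused, fused_var = fuse_odometry_with_fixes(steps, fixes)
print(f"odometry only error: {abs(odometry_only - true_final):.2f} m")
print(f"fused error:         {abs(fused - true_final):.2f} m")
```

In this toy run the odometry-only estimate accumulates error without bound, while each absolute fix pulls the fused estimate back toward the true position. A basic Kalman filter assumes zero-mean noise, so a systematic scale error like the one simulated here is only partially corrected, which is one reason practical systems model such biases explicitly.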

Addressing Real-World Challenges

OMNInav’s capabilities excel in overcoming the toughest navigation challenges in GPS-denied environments:

1. Low-Light and Night Operations

Trained on visible, near-infrared and long-wave infrared imagery, OMNInav ensures reliable navigation regardless of lighting conditions.

Figure 5. OMNInav’s modality-agnostic capability enables navigation at night in complete darkness.

2. Seasonal and Environmental Changes

Handles vegetation growth, snow cover, and landscape alterations using its robust AI models trained on multi-year satellite imagery

Figure 6. OMNInav is robust, handling seasonal variations from lush greenery to snow-covered terrain.

3. Man-Made Environmental Transformations

Adapts to dynamic environments, such as post-construction zones or conflict-affected areas, ensuring continuous navigation accuracy

Figure 7. OMNInav accurately registers navigation points despite extensive urban damage.

Performance and Real-World Applications

OMNInav demonstrates exceptional performance across diverse scenarios:

• Drift-Free Navigation: Maintains positional accuracy over extended distances

• Adaptability to Outdated Imagery: Reliable localization even with datasets up to 10 years old

• Compatibility Across Modalities: Works seamlessly with visible, near-infrared, and thermal imaging sensors

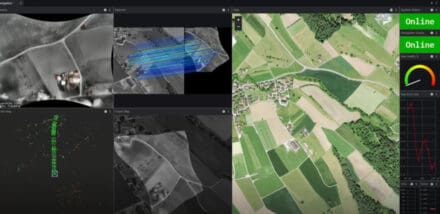

Figure 8. OMNInav navigating complex farmland patterns.

Competitive Advantage

OMNInav’s unique multi-modal design ensures it outperforms competitors in GPS-denied environments by:

• Surpassing Single-Modality Systems: Combines SLAM and AI-driven feature matching to overcome the limitations of traditional approaches like optical flow, VIO, or basic feature-based localization

• Cost-Effective Alternative to Military-Grade Systems: Offers military-grade reliability without the prohibitive cost, bulk, or calibration demands of high-end inertial navigation systems

• Excelling in GPS-Spoofing Scenarios: Fully bypasses GPS reliance, making it indispensable in regions like Ukraine where GPS spoofing and jamming are prevalent

A Game-Changer in Drone Navigation

OMNInav is redefining the standards for GPS-denied navigation with:

• Seamless integration into existing systems

• Superior adaptability to environmental changes

• Industry-leading accuracy in autonomous operations

To further enhance UAS capabilities, OKSI also offers OMNIlocate, a solution for high-accuracy (CAT I/II) target localization using standard gimbaled sensors.

Ready to take your unmanned systems to the next level?

Discover how the OMNISCIENCE suite can revolutionize your operations with advanced, modular solutions for GPS-denied navigation, tracking, target geolocation, and more. Whether you’re planning complex missions or navigating challenging terrains, OKSI has the tailored tools you need.

Meet the OKSI team at SHOT Show 2025, January 20-24, at the Venetian Las Vegas. Contact us to schedule a meeting.

Contact Us

Figure 9: Highlights of the OMNISCIENCE suite, including OMNInav and other advanced modules designed for unmanned systems and munitions.

Email: [email protected]

Website: oksi.ai/contact

Learn more: oksi.ai/omniscience

Explore the full range of OMNISCIENCE modules and cutting-edge technologies from OKSI. Watch our video series to see how we’re redefining drone autonomy for both defense and commercial applications.

